There are two ways to access the cluster:

- command-line access through SSH, with the client of your choice (a terminal on Linux/macOS, PuTTY or other clients on Windows);

- graphical mode using the ThinLinc client (instructions here).

SSH access through a terminal requires basic knowledge of UNIX commands. Its advantage is that it needs very little bandwidth.

The ThinLinc client gives access to the HPC system (and your data) through a graphical user interface, which can be easier to use. However, it requires more bandwidth.

Note:

- both methods use a secure authentication mechanism, so both are “safe”;

- ThinLinc can be used to run interactive jobs that need a graphical interface, and it gives access to a number of application nodes from which you can submit jobs to all the LSF-managed clusters;

- the first time you log in to our systems, you can compare the SSH fingerprints of our login servers;

- logging in from remote locations (without a VPN connection) requires SSH key authentication, for both SSH and ThinLinc access; please follow these instructions.

Our LSF 10 setup is based on Scientific Linux 7.9.

Command line: SSH access

Once a terminal emulator and an SSH client are installed on your own computer, you have everything you need to log in to the system. Both are installed by default on GNU/Linux and macOS; on Windows they are available through third-party software such as PuTTY, KiTTY, Cygwin, or similar. In the following, we assume that you are using a terminal.

Access to the LSF 10 cluster (Scientific Linux 7.9)

At the prompt, type:

ssh userid@login1.gbar.dtu.dk

or

ssh userid@login1.hpc.dtu.dk

or

ssh userid@login2.gbar.dtu.dk

or

ssh userid@login2.hpc.dtu.dk

where userid is your DTU username and login1.hpc.dtu.dk (or one of the other host names above) is the login node. When prompted, enter your password (more information can be found here). Please DO NOT run any applications on the login node.

You can then switch to another node (dynamically assigned by the system) by typing

linuxsh

to get an interactive shell meant for interactive jobs. From there, you can access your data, start any of the programs installed on the system, and prepare and submit jobs.

Note: after issuing the linuxsh command you end up on a shared node, so be aware that other users may be working on that same node.
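If you log in often, you can optionally define a short alias in your OpenSSH client configuration file (~/.ssh/config) instead of typing the full host name each time. This is a minimal sketch assuming an OpenSSH client (the default on GNU/Linux and macOS); the alias dtu-hpc, the username userid and the key path ~/.ssh/id_dtu_hpc are placeholders, not values prescribed by this guide. The IdentityFile line only matters if you log in from a remote location with an SSH key; remove it otherwise.

```
# ~/.ssh/config: OpenSSH client configuration (sketch)
# "dtu-hpc" is a hypothetical alias; choose any name you like.
Host dtu-hpc
    # Any of the login nodes listed above can be used here.
    HostName login1.hpc.dtu.dk
    # Replace "userid" with your DTU username.
    User userid
    # Hypothetical path to your password-protected private key;
    # only needed for key-based login from outside DTU (without VPN).
    IdentityFile ~/.ssh/id_dtu_hpc
```

With this entry in place, typing ssh dtu-hpc connects to the login node as userid, and you can then run linuxsh as described above.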

Graphical Interface: ThinLinc

In the ThinLinc client, the procedure for connecting from DTU premises differs slightly from the one for connecting from remote locations.

Accessing from DTU premises (or via VPN)

Start the application and, when prompted, fill in the three required fields:

Server: thinlinc.gbar.dtu.dk

Username: DTU userid

Password: DTU password

Remember that the userid and password are case-sensitive. You can also look at the Options to customize the program's behaviour.

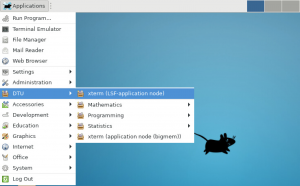

After logging in, ThinLinc provides a traditional desktop interface; the default is the Xfce desktop environment. Click the Applications menu button (top-left corner) or right-click on the desktop to open menus from which you can choose the application you need, including a terminal.

ThinLinc Xfce Screenshot

To access one of the application nodes, either to run programs interactively or to submit jobs to one of the LSF clusters, simply open a terminal, or choose Applications Menu -> DTU -> xterm (LSF-application node), as shown in the screenshot above.

Accessing from remote locations (without VPN)

DTU requires two-factor authentication, which we provide through SSH keys.

If you do not have an SSH key on the HPC infrastructure, please follow these instructions to create one. Note that you HAVE to specify a password for the key (keys without a password are not accepted by ThinLinc).

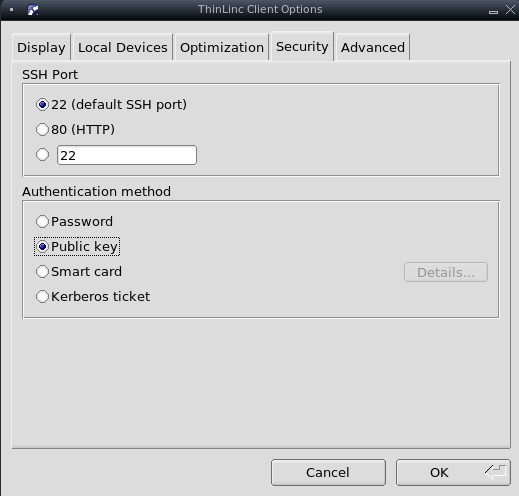

Next, change the authentication method in ThinLinc:

Options -> Security -> Public key

This will change the access prompt to:

Server: thinlinc.gbar.dtu.dk

Username: DTU userid

Key: path to your SSH private key

When logging in, you will first be prompted for the SSH key password.